What is the point of this page (from a student's perspective)? It seems arbitrary to me. (I can see that it's introducing data scraping.) I'll try to think of a mini-project to do here, but please feel free to leave an idea... --MF

Idea from Bowen: Preview GPS for the easy first part (GPS-NYC.csv, from Unit 4 Lab 4: GPS Forensics) and the Weather app for the harder "unlucky" part below (Unit 4 Lab 2: Weather App).

The Internet is full of information that you can use in your programs. For example, you will write a block that looks up the weather forecast for your location and tells you whether to carry an umbrella, or a block that reports the current temperature for a given location:

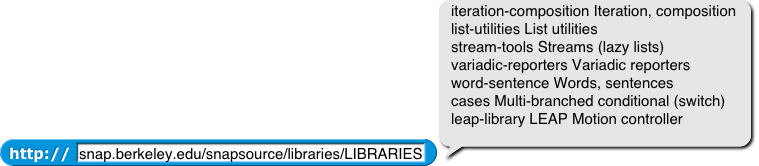

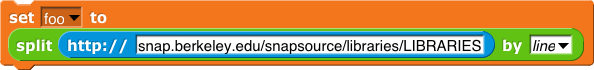

We want a list of all the available libraries of Snap! blocks.

Sometimes you're lucky, and a URL points directly to a data file:

Find the split block in the Operators palette and experiment with the different options for its second input slot. Read the help screen (right-click on a split block and choose "Help") if you need ideas.
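If it helps to see the same idea outside Snap!, here is a rough Python analogue of splitting one piece of text in different ways. It is only an illustration, not how Snap!'s split block is implemented; the function `split_text` and its option names (`"letter"`, `"line"`, `"whitespace"`) are hypothetical labels chosen for this sketch. It also shows a common pattern: split a data file into lines, then split each line at commas to get a table.

```python
# Rough Python analogue of splitting text in different ways.
# Hypothetical helper; not the implementation of Snap!'s split block.

def split_text(text: str, how: str) -> list[str]:
    """Split text by letter, by line, by whitespace, or by any other separator string."""
    if how == "letter":
        return list(text)          # every character becomes its own item
    if how == "line":
        return text.splitlines()   # break at line endings
    if how == "whitespace":
        return text.split()        # runs of spaces, tabs, and newlines act as one separator
    return text.split(how)         # any other string is used as a literal separator


text = "name,size\ncat.png,12\ndog.png,7"

lines = split_text(text, "line")
print(lines)  # ['name,size', 'cat.png,12', 'dog.png,7']

# Splitting each line at commas turns the text into a table (a list of rows).
rows = [split_text(line, ",") for line in lines]
print(rows)   # [['name', 'size'], ['cat.png', '12'], ['dog.png', '7']]
```

Note that a plain comma split does not handle quoted fields that themselves contain commas; real CSV files may need a proper CSV parser.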

The scrape block in this project is an attempt to scrape just the file names from the libraries web page. It almost works, but it has a couple of problems.

Look at the definition of scrape and make sure you understand how it works. It uses helper blocks

Fix scrape so that you get a complete list of files and nothing else.

Then try your scrape block on snap.berkeley.edu/snapsource/Costumes to make sure it works on any file directory. ("Directory" is the technical term for what users call a "folder": a collection of files.)
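For comparison, here is a minimal Python sketch of the same scraping idea: collect every link on a directory listing page and keep only the ones that look like file names. It assumes the listing is HTML in which each entry is an `<a href="...">` link, as in many auto-generated server listings. The filtering rules (skip sort links that start with `?`, absolute paths such as the parent directory, and subdirectories ending in `/`) are guesses about a typical listing, not a description of the actual problems in this project's scrape block. `LinkCollector`, `scrape_file_names`, and the sample page are hypothetical.

```python
# Minimal sketch: scrape file names from an HTML directory listing.
# Assumption: entries are <a href="..."> links; real pages may differ.
from html.parser import HTMLParser
from urllib.parse import unquote


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def scrape_file_names(html: str) -> list[str]:
    """Return just the file names linked from a directory listing page."""
    parser = LinkCollector()
    parser.feed(html)
    names = []
    for href in parser.hrefs:
        # Skip links that are not files in this directory:
        # query strings (e.g. column-sorting links), absolute paths
        # (e.g. the parent directory), and subdirectories (ending in "/").
        if href.startswith("?") or href.startswith("/") or href.endswith("/"):
            continue
        # Decode URL escapes such as %20 back into ordinary characters.
        names.append(unquote(href))
    return names


# Hypothetical sample page, loosely modeled on a server-generated listing.
sample = """
<a href="?C=N;O=D">Name</a> <a href="?C=S;O=A">Size</a>
<a href="/snapsource/">Parent Directory</a>
<a href="iteration.xml">iteration.xml</a>
<a href="strings.xml">strings.xml</a>
<a href="my%20cat.png">my cat.png</a>
<a href="old/">old/</a>
"""
print(scrape_file_names(sample))  # ['iteration.xml', 'strings.xml', 'my cat.png']
```

Testing the same function on a second, differently shaped listing (as the exercise does with the Costumes directory) is a good way to find rules that only happened to work for the first page.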