-

The factorial of a positive integer n (written "n !") is the product of all the integers from 1 to n. For example:

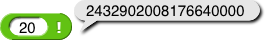

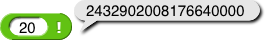

5! = 1 \times 2 \times 3 \times 4 \times 5 = 120 Load this project, and try out these inputs:

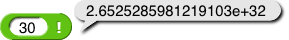

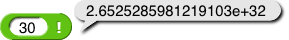

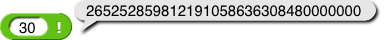

Huh? Apparently 30! is a decimal fraction, 2.65..., and what's that "e+32" at the end?

What you're seeing is scientific notation. The "e" means "times ten to the power," so this notation means

2.6525285981219103 × 10 32 = 265252859812191030000000000000000

So why did Snap! display 20! in ordinary whole number representation, just a string of digits, but 30! in scientific notation? You already know that computers use bits to store information, including numbers. The new thing for you to learn is that every computer model is designed with a certain width, the number of bits that the processor reads from memory or writes into memory at a time. The object composed of that number of bits is called a word. As we write this in 2016, most new computers are 64 bits wide—that is, they have 64 bit words—but there are still a lot of 32-bit computers around. The first microcomputer, the Intel 4004, first sold in 1971, was just 4 bits wide!

If you got an answer in scientific notation for 20!, you're using a 32-bit computer.

Processor widths don't have to be a power of two. Some old computers—the kind you see in old movies that filled a large room—used 12-bit, 36-bit, and 60-bit words. But modern personal computers started at 8 bits and the widths have been doubling with each new generation.

On a 64-bit computer, if you want to represent both positive and negative integers, one word can fit 263=9223372036854775808 of each (264 nunbers altogether). 263 is about 9 quintillion, 9×1018. That means that the 19-digit value of 20! just fits in a word, but the 33-digit 30! doesn't.

All computers have a finite amount of memory. So it's not a surprise that there's a limit to the size of integers a computer can represent exactly. But that limit is way more than 64 bits; your computer probably has 137 billion or more bits of memory. Why can't programming languages just use more than one word if necessary to represent an integer?

They can. It's just that the language that computers "natively" know adds one-word numbers with a single machine intruction, whereas the person who designs the programming language that we write in must work a little harder to make "add" work with multiple-word values. (A multiple-word integer value is called a bignum.) The best programming languages do that; remember that the whole point of computer science is abstraction, and one important kind of abstraction is protecting the user from having to know about hardware limitations. Alas, all the languages you are most likely to have heard of limit integer values to what fits in a word.

The paradigmatic example of a great programming language is Scheme. You can learn it from a wonderful computer science book (in our opinion, the best ever written).

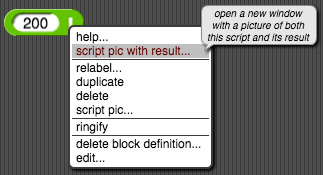

! block.

What's up with that? We've already seen that 200!, although it's very large, isn't infinite. Computers are perfectly capable of handling it. As you might guess, this is also the result of a size limitation. The floating point representation that computers use for non-integers (and, in a non-bignum language, integers that are too big for the integer representation) can only represent numbers up to about 10308. But 200! is about 8×10374 (as you should know if you did problem 7). If the result of a computation is bigger than that, floating point hardware returns a special code that represents Infinity.

Floating point is essentially a binary version of scientific notation. (Click for details.)

Most real numbers, not just especially large ones, are not exactly representable in this format. Think about the decimal representation of 1/3, 0.33333... It has infinitely many digits, so the closest you can come in floating point isn't exactly 1/3. That's why even great programming languages set a limit to the number of digits representable in floating point notation.

On the other hand, fractions such as 1/3 can be represented exactly using a pair of integers, one for the numerator and one for the denominator.

with bignums off and with bignums on.

with bignums off and with bignums on.Here's the binary representation of 20! in 64-bit integer format:

0010000111000011011001110111110001000010101101000000000000000000

As an integer, that pattern of bits represents 243290200817664000. But that same pattern of bits represents

4.85611351839403586987046017196×10-146

when considered as a floating point representation. The same pattern of bits may also represent an instruction in the machine language of whatever computer you're using. This is so important we're going to say it in boldface: The meaning of a sequence of bits depends on the context in which it is used. That's the take-away understanding you should get from this page, after you've forgotten details such as how many bits there are in a word.

What exactly do we mean by "context"? How does a programming language know whether to interpret a bit sequence as an integer, a float, a string of characters, an instruction, or something else? Here, too, programming languages differ. There's always another bit sequence somewhere that encodes the data type of the bit sequence. In great languages, that data type code is attached to the value itself. In ordinary languages, when you make a variable, you have to say what type of value it will contain, and the data type is attached to the variable, so you can't get exact answers when the values are integers and also be able to handle non-integer values of the same variable. So instead of seeing

you see things like

We're telling you all these things because Snap! has strengths that many programming languages do not, and it's very likely that your next year's computer science class will use one of those other languages. For example, if you take the AP Computer Science A course, it will use Java.

false and 1 for true.

A character string is a sequence of Unicode character codes, each of which is a small integer—

a sequence of bits. Lists and procedures, too, are binary sequences, but the precise format is beyond the scope of this course.