- For example, try

once with bignums on and once with bignums off.

On this page, you'll learn how computers store numbers that are not integers.

The way computers store numbers that are not integers is called floating point.

Scientific notation (such as 2,350,000 = 2.35 × 10⁶) uses powers of ten to represent very large or very small values. Floating point is the same idea but with powers of two.
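To see the "powers of two" version of scientific notation, here is a small Python sketch. It assumes Python's `float`, which is an IEEE 754 double-precision number on essentially all platforms. `math.frexp` splits a float into a mantissa and a power-of-two exponent, just as 2.35 × 10⁶ splits 2,350,000 into a mantissa and a power of ten.

```python
import math

# Scientific notation: 2,350,000 = 2.35 × 10**6 (a mantissa times a power of ten).
# Floating point does the same thing, but with a power of two.
value = 2350000.0
mantissa, exponent = math.frexp(value)  # value == mantissa * 2**exponent, with 0.5 <= mantissa < 1

print(mantissa, exponent)         # the power-of-two "scientific notation" parts
print(mantissa * 2**exponent)     # reassembling them gives back the original value exactly
print(value.hex())                # the same idea written in hexadecimal: 0x1.<fraction>p+<exponent>
```

`frexp` normalizes the mantissa to the range [0.5, 1), whereas `float.hex` shows the form 1.xxx × 2ⁿ; both describe the same stored number.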

In some languages, you have to declare what kind of data your variables will store. If you have a collection of values, it's obvious that you'll need a list to store it. But it's not always obvious how best to represent a value in a computer program. For example, the most straightforward way to represent an amount of money is in floating point because it allows for decimals. But if you use floating point to store a value like 3.20, it's likely to be displayed to the user as 3.2, and people rarely write money this way. Instead, even though it takes more programming effort, you might choose to create an abstract data type money, with a constructor money dollars: ( ) cents: ( ) that takes two integer inputs, so that you can control how the value is displayed.
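Here is a minimal Python sketch of such a money abstract data type. The names `make_money`, `money_dollars`, `money_cents`, and `money_to_text` are hypothetical, chosen only for illustration. One design choice, assumed here, is to store the whole amount as an integer number of cents, which avoids floating point roundoff entirely. The sketch also assumes non-negative amounts.

```python
# Hypothetical "money" abstract data type (assumes non-negative amounts).
# The value is stored as an integer number of cents, so no floating point is involved.

def make_money(dollars: int, cents: int) -> int:
    """Constructor: combine dollars and cents into a total number of cents."""
    return dollars * 100 + cents

def money_dollars(m: int) -> int:
    """Selector: the whole-dollar part."""
    return m // 100

def money_cents(m: int) -> int:
    """Selector: the cents part, 0 through 99."""
    return m % 100

def money_to_text(m: int) -> str:
    """Display the amount with exactly two digits of cents."""
    return f"${money_dollars(m)}.{money_cents(m):02d}"

price = make_money(3, 20)
print(3.20)                  # a float drops the trailing zero: 3.2
print(money_to_text(price))  # the abstract data type keeps it: $3.20
```

Storing cents as one integer also keeps addition and subtraction of amounts exact. Storing two separate integer fields would work too, but then every operation would have to handle carrying between cents and dollars.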

Floating point allows computers to store very large numbers and also decimals, but the format still has a fixed number of bits. Just as with integers, that limits the range of floating point values and the accuracy of mathematical operations on them. However, with floating point, a value that needs more bits than the format has is usually rounded to the nearest value that fits. The result is a roundoff error instead of an overflow error.
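The fixed number of bits is easy to observe in Python. This sketch assumes IEEE 754 double precision, which gives 53 significant bits. An integer that needs a 54th bit is silently rounded when converted to a float, and no error is reported.

```python
import sys

# IEEE 754 doubles have 53 significant bits (mant_dig on IEEE platforms).
print(sys.float_info.mant_dig)

big = 2**53                            # exactly representable as a float
rounded = float(big + 1) == float(big)
print(rounded)                         # True: big + 1 needs 54 bits, so it is rounded
print(sys.float_info.max)              # the largest finite float the format can hold
```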

The decimal representation of ⅓ is 0.33333...; it has infinitely many digits. (In binary it is 0.010101...₂, which also never ends.) So the closest you can come in floating point isn't exactly ⅓: the digits get cut off after a while because the format has only a fixed number of bits.
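Python's `fractions.Fraction` can show the exact rational value that a float actually stores. This is a sketch assuming IEEE 754 doubles. The stored value of `1 / 3` is a fraction with a power-of-two denominator, so it cannot equal ⅓ exactly.

```python
from fractions import Fraction

third = 1 / 3                     # the nearest double to one third
exact = Fraction(third)           # the exact rational value that double stores
print(exact)                      # numerator over a power-of-two denominator
print(exact == Fraction(1, 3))    # False: the stored value is not exactly 1/3
print(float(exact - Fraction(1, 3)))  # the tiny error left by cutting off the bits
```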

Roundoff errors can result in some pretty non-intuitive results...

and then try  .

This isn't a bug in Snap!, which is correctly reporting the result computed by the floating point hardware.
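A comparable roundoff surprise in Python follows; it is not necessarily the example the Snap! blocks showed. Neither 0.1 nor 0.2 can be stored exactly in binary, so their sum is rounded to a double that differs from the double nearest 0.3. Comparing with a tolerance, here via `math.isclose`, is a common workaround.

```python
import math

# 0.1 and 0.2 are each stored as nearby binary approximations,
# and their rounded sum is not the double closest to 0.3.
total = 0.1 + 0.2
print(total)          # 0.30000000000000004
print(total == 0.3)   # False

# A common workaround: compare with a tolerance instead of ==.
print(math.isclose(total, 0.3))  # True
```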

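No matter how good the hardware is, certain kinds of computations are likely to give severe errors in floating point. One simple example is subtracting two numbers that are almost equal in value. The correct answer will be near zero, but the matching leading digits cancel out. The result keeps only the low-order digits, and those are exactly the ones already distorted by roundoff. If the two numbers are so close that they were rounded to the same floating point value, an exact zero is reported even though the true difference isn't zero.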
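A short Python sketch of this cancellation effect, assuming IEEE 754 doubles, follows. The subtraction itself is exact; the damage was done earlier, when `1 + 1e-15` and `1 + 1e-17` were rounded to the nearest representable doubles.

```python
# Subtracting nearly equal numbers (cancellation).
a = 1 + 1e-15        # rounded to the nearest double near 1
diff = a - 1         # exact subtraction, but the rounding above survives
print(diff)          # close to 1e-15, but roughly 11% too large
rel_error = abs(diff - 1e-15) / 1e-15
print(rel_error)

b = 1 + 1e-17        # so close to 1 that it rounds to exactly 1.0
print(b - 1)         # 0.0, although the true difference is 1e-17
```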

A notorious example is the fate of the Ariane 5 rocket launched on June 4, 1996 (European Space Agency 1996). In the 37th second of flight, the inertial reference system attempted to convert a 64-bit floating-point number to a 16-bit signed integer. The value was too large to fit, which triggered an overflow error. The guidance system interpreted the error output as flight data, causing the rocket to veer off course and be destroyed.
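The failing step was a narrowing conversion. A Python sketch of the same kind of conversion follows; it is an illustration only, not the flight software. The function name `to_int16` and the value 40000.0 are hypothetical. Python's `struct` format `"h"` is a 16-bit signed integer, and packing a value outside -32768..32767 raises `struct.error` rather than producing a wrong number.

```python
import struct

def to_int16(x: float) -> int:
    """Hypothetical helper: convert a 64-bit float to a 16-bit signed integer.

    struct's 'h' format packs a 16-bit signed short; values outside
    -32768..32767 raise struct.error instead of silently wrapping.
    """
    n = int(x)                       # truncate toward zero
    packed = struct.pack("<h", n)    # fails here if n does not fit in 16 bits
    return struct.unpack("<h", packed)[0]

print(to_int16(1234.7))              # fits in 16 bits: 1234
try:
    to_int16(40000.0)                # hypothetical value too large for 16 bits
except struct.error as err:
    print("overflow:", err)
```

The design point is that the out-of-range case has to be handled somewhere. Here it surfaces as an exception the caller can catch, whereas in the rocket the error report was treated as if it were data.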

The Patriot missile defense system used during the Gulf War was also rendered ineffective by roundoff error (Skeel 1992, U.S. GAO 1992). The system kept time in an integer register that was incremented every 0.1 s. However, the integer tick count was converted to seconds by multiplying it by a binary approximation of 0.1, 0.00011001100110011001100₂ = 209715/2097152.

As a result, after 100 hours (3.6 × 10⁶ ticks), an error of

$$\left(\frac{1}{10}-\frac{209715}{2097152}\right)(3600\times100\times10)=\frac{5625}{16384} \approx 0.3433 \text{ seconds}$$

had accumulated. This discrepancy caused the Patriot system to continuously recycle itself instead of targeting properly. As a result, an Iraqi Scud missile could not be targeted and was allowed to detonate on a barracks, killing 28 people.
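The arithmetic above can be checked exactly with Python's `fractions` module. The sketch assumes, consistent with the binary value given in the text, that 0.1 was chopped (truncated, not rounded) to 23 bits after the binary point.

```python
from fractions import Fraction

# 0.1 chopped to 23 bits after the binary point (the register's approximation).
chopped = Fraction(int(Fraction(1, 10) * 2**23), 2**23)
print(chopped == Fraction(209715, 2097152))   # True

# Error per tick, times the number of 0.1 s ticks in 100 hours.
ticks = 3600 * 100 * 10
drift = (Fraction(1, 10) - chopped) * ticks
print(drift)          # 5625/16384
print(float(drift))   # about 0.3433 seconds
```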

From Analog and Digital Conversion, by Wikibooks contributors, https://en.wikibooks.org/wiki/Analog_and_Digital_Conversion/Fixed_Wordlength_Effects

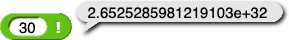

Computer arithmetic on integers is straightforward. Either you get an exactly correct integer result or, if the result won't fit in (non-bignum) integer representation, you get an overflow error and the result is, usually, converted to floating point representation (as 30! was).
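Python integers are arbitrary precision, so they always behave like Snap! with bignums on. To imitate a fixed-size integer format, this sketch assumes a 64-bit signed limit and converts to a float the way a language without bignums might fall back. The conversion keeps the magnitude of 30! but not all of its digits.

```python
import math

n = math.factorial(30)        # exact: Python ints never overflow
print(n)                      # every digit of 30!
print(n > 2**63 - 1)          # True: too big for a 64-bit signed integer (assumed limit)

approx = float(n)             # fall back to floating point
print(approx)                 # a scientific-notation approximation of 30!
print(int(approx) == n)       # False: close, but some digits were rounded away
```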

By contrast, computer arithmetic on floating point numbers is hard to get exactly right. Prior to 1985, every model of computer had a slightly different floating point format, and all of them got wrong answers to certain problems. This situation was resolved by the IEEE 754 floating point standard, which is now used by every computer manufacturer and has been revised (in 2008 and 2019) since it was first published in 1985.

With bignums turned off, when a result is too large to be an integer, it is converted to floating point.

What's going on? Although 200! is very large, it's not "infinity." This report is caused by the size limitation of the floating point format. If the result of a computation is bigger than the largest number the format can store, the computer returns a special code that languages print as Infinity or ∞.
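The same overflow to infinity is visible in Python when 200! is computed entirely in floating point, as a language without bignums would. This sketch assumes IEEE 754 doubles, whose largest finite value is about 1.8 × 10³⁰⁸. Multiplying past that limit gives the special value `inf`.

```python
import math
import sys

# Multiply 1.0 * 2 * ... * 200 using floats only.
product = 1.0
for k in range(1, 201):
    product *= k               # float times int gives a float
print(product)                 # inf
print(product == math.inf)     # True
print(sys.float_info.max)      # the largest finite float
```

Python makes a different choice when converting an exact integer: `float(math.factorial(200))` raises `OverflowError` instead of returning `inf`.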

How does a programming language know whether to interpret a bit sequence as an integer, a floating point, a text string of Unicode characters, an instruction, or something else?

false and 1 for true.
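The bits alone don't say what they mean. In this Python sketch, the eight bytes that store the double-precision float 1.0 are read back as a 64-bit signed integer, which gives a completely different number. Only the type information tells the program which interpretation is intended.

```python
import struct

# Eight bytes holding the double-precision float 1.0 ...
raw = struct.pack("<d", 1.0)
# ... read back as a 64-bit signed integer instead.
as_int = struct.unpack("<q", raw)[0]
print(as_int)                        # 4607182418800017408
print(hex(as_int))                   # 0x3ff0000000000000
print(struct.unpack("<d", raw)[0])   # 1.0 when read as a float again
```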

In well-designed languages (those based on Scheme, for example), that data type code is attached to the value itself. In other languages, when you make a variable, you have to say what type of value it will contain, and the data type is attached to the variable, so you can't both get exact answers when the values are integers and also be able to handle non-integer values of the same variable. So instead of seeing:

you see things like:

In a language with dynamic typing (where you don't have to declare the types of variables) it's just as easy to make a list whose items are of different data types as it is to make one whose items are all the same type (all integers or all character strings, etc.)
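Python is not based on Scheme, but it is also dynamically typed and also attaches the type to each value rather than to the variable. This sketch shows one variable holding an integer and then a non-integer, and one list mixing several types.

```python
# The type travels with each value, not with the variable.
x = 7
print(type(x).__name__)      # int
x = x / 2
print(x, type(x).__name__)   # 3.5 float

# A list can mix types as easily as it can hold one type.
mixed = [42, 3.5, "hello", True, [1, 2]]
print([type(item).__name__ for item in mixed])
# ['int', 'float', 'str', 'bool', 'list']
```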

Snap! has strengths that many programming languages do not, and it's very likely that your next computer science class will use one of those other languages.