Parallelism

On this page, you will learn how running multiple scripts in parallel can reduce the total time it takes to run an algorithm.

In Snap!, you are accustomed to seeing a bunch of scripts that all run independently, which may or may not be associated with different sprites. This is kind of like parallel computing. So, if we had a different computer for each sprite, that would be true parallelism. As it is, there is only one computer, and it divides its attention among the processes by running a little bit of one and then running a little bit of the next one. Specifically, it switches at the bottom of loops (forever, repeat, etc.).

:

Sequential Computing vs.

Parallel Computing

CSN-2.A.1, CSN-2.A.2

This section covers two computational models:

- In sequential computing, operations are performed in order one at a time.

- In parallel computing, the program is broken into smaller steps, some of which are performed at the same time. Modern computers have multiple processors (2, 4, or 8) in a single computer, so you can do small-scale parallel processing on the machine on your desk.

CSN-2.A.4

You can compare the efficiency of two different algorithmic solutions to a problem by comparing the time it takes them to perform the same task.

-

CSN-2.A part b, CSN-2.A.5

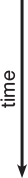

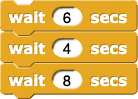

How long will this

sequential program take to run?

8

A sequential solution takes as long as the sum of the run times of all of its steps.

4

A sequential solution takes as long as the sum of the run times of all of its steps.

6

A sequential solution takes as long as the sum of the run times of all of its steps.

CSN-2.A.5

The run time of a sequential algorithm is the sum of the run times of all its steps.

-

CSN-2.A part b, CSN-2.A.6

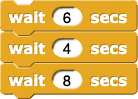

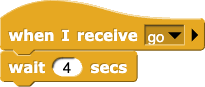

How long will this

parallel program take to run?

18

The two when I receive tasks happen in parallel, not one after the other.

8

The longest parallel time does matter, but it's not the only thing that contributes to the total time.

6

Broadcast and wait waits until all the tasks that it started have finished.

CSN-2.B.1, CSN-2.B.2, CSN-2.B.3, CSN-2.B.4

:

Distributed Computing

CSN-2.A.3

Distributed computing is a form of parallel computing that uses multiple computers (perhaps even spread out around the world).

Writing a program that does nothing but wait is, of course, unrealistic, but what is realistic is that in most problems, there isn't a solution that's purely parallel. Some part of the computation has to be done sequentially. In the previous question, the sequential part is modeled by wait 6 secs. Parallelization with this silly example feels trivial, but imagine you work for Google. Millions of search queries and web page edits have happened today, and it's your job to have to process them. If they didn't have huge server farms with thousands of computers in each building, they couldn't keep up at all. Distributed computing lets you scale to very large problems.

CSN-2.A.6

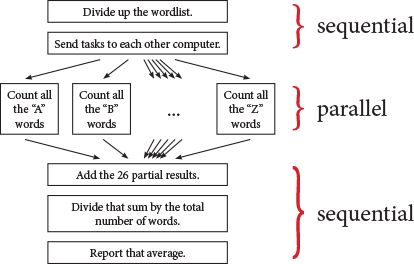

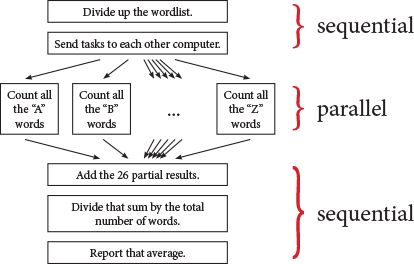

As a more specific example, suppose you want to know the average word length in list of 100,000 words. You can divide the task among several computers (one for each starting letter). Each computer adds the lengths of all the words assigned to it (all the "A" words, all the "B" words, etc). Then one computer has to add the 26 partial results and divide by the total number of words to find the average. To calculate the run time of this parallel solution, you would add the run time of the longest parallel portion (the run time for the letter with the most words) to the run time of the sequential portion (adding the 26 partial results and dividing the sum by the total number of words).

As a more specific example, suppose you want to know the average word length in list of 100,000 words. You can divide the task among several computers (one for each starting letter). Each computer adds the lengths of all the words assigned to it (all the "A" words, all the "B" words, etc). Then one computer has to add the 26 partial results and divide by the total number of words to find the average. To calculate the run time of this parallel solution, you would add the run time of the longest parallel portion (the run time for the letter with the most words) to the run time of the sequential portion (adding the 26 partial results and dividing the sum by the total number of words).

Because every computation includes a sequential portion, there is a limit to how much you can speed up a task by adding processors.

:

Processor

A processor is a piece of circuitry inside a computer that processes the instructions from computer programs.

Image credit: Wikipedia user Solipsist

CSN-2.A parts a and b, CSN-2.A.4, CSN-2.B.5

-

Suppose a task includes one minute of sequential steps and a parallelizable portion that would take an hour if done sequentially. Fill in this table:

| Number of Processors |

Total Time Required |

Solution Type |

| 1 |

61 minutes (1 hr + 1 min) |

sequential solution |

| 2 |

31 minutes (30 min + 1 min) |

parallel solutions |

| 3 |

|

| 4 |

|

| 10 |

|

| 20 |

|

| 30 |

|

| 60 |

|

| 120 |

|

| 240 |

|

| 480 |

|

CSN-2.B.5

- Consider the law of diminishing returns:

- If you have one processor and you add one more, how much time do you save?

- If you have 240 processors and you add 240 more, how much time do you save?

How many processors do you think are worth having for this problem?

How many processors do you think are worth having for this problem?

What is the law of diminishing returns?

The law of diminishing returns is an idea from economics that basically says that after a certain point, more is not better. For example, if you got five birthday presents, you might be happier than if you got only one, but getting more presents isn't necessarily better. Imagine you received a hundred presents. You'd get bored opening them and probably feel overwhelmed. And getting a thousand or a million presents wouldn't make your birthday any happier. The same rule applies to birthday cake!

:

Speedup

CSN-2.A.7

Programmers refer to the speedup of parallel solution to describe how many times as fast the parallel solution is compared to the sequential solution:

\text{speedup} = \frac{\text{sequential time}}{\text{parallel time}}

Computer hardware is very reliable; it's rare for the hardware to break down while you're running a program. But in distributed computing with tens of thousands of computers working on the same problem, it's likely that one of them will fail. (If the probability of one computer failing during a program run is 0.0001, and you use 10,000 computers, then the probability of one of them failing is about 0.368—about a third of the time!) So software has to compensate by checking that the machines are running and reassigning the tasks of a failed computer to a working one.

Also, when you are doing things in parallel, the parallel processes can interfere with each other.

An example of interfering processes with banking...

If one person withdraws money from an ATM at the same time as someone at a different ATM withdraws money from the same account, you could run into a situation like this:

|

ATM 1 |

ATM 2 |

| looks up the balance ($100) |

|

|

looks up the balance ($100) |

|

checks if the amount requested ($40) is available |

|

adjusts the balance ($60) |

|

dispenses the money |

| check if the amount requested ($20) is available |

|

| adjusts the balance ($80) |

|

| dispenses the money |

|

Because ATM 2 changed the account balance after ATM 1 looked up the balance, ATM 1 didn't know that the balance had been changed. The bank account ended up a balance of $80 even though it started at $100 and a total of $60 was withdrawn.

For these reasons and others, a parallel program is harder to write and debug than a sequential program.

CSN-2.B

-

Identify some benefits and potential challenges of parallel and distributed computing.

Identify some benefits and potential challenges of parallel and distributed computing.

-

\frac{14}{18}

Speedup is calculated by dividing the sequential time by the parallel time.

\frac{18}{6}

Broadcast and wait waits until all the tasks that it started have finished.

\frac{18}{8}

The longest parallel time does matter, but it's not the only thing that contributes to the total parallel time.

18Correct!8A sequential solution takes as long as the sum of the run times of all of its steps.4A sequential solution takes as long as the sum of the run times of all of its steps.6A sequential solution takes as long as the sum of the run times of all of its steps.

18Correct!8A sequential solution takes as long as the sum of the run times of all of its steps.4A sequential solution takes as long as the sum of the run times of all of its steps.6A sequential solution takes as long as the sum of the run times of all of its steps.

As a more specific example, suppose you want to know the average word length in list of 100,000 words. You can divide the task among several computers (one for each starting letter). Each computer adds the lengths of all the words assigned to it (all the "A" words, all the "B" words, etc). Then one computer has to add the 26 partial results and divide by the total number of words to find the average. To calculate the run time of this parallel solution, you would add the run time of the longest parallel portion (the run time for the letter with the most words) to the run time of the sequential portion (adding the 26 partial results and dividing the sum by the total number of words).

As a more specific example, suppose you want to know the average word length in list of 100,000 words. You can divide the task among several computers (one for each starting letter). Each computer adds the lengths of all the words assigned to it (all the "A" words, all the "B" words, etc). Then one computer has to add the 26 partial results and divide by the total number of words to find the average. To calculate the run time of this parallel solution, you would add the run time of the longest parallel portion (the run time for the letter with the most words) to the run time of the sequential portion (adding the 26 partial results and dividing the sum by the total number of words).