In one sense, any architecture can be a hobbyist architecture. Even back in the days of million-dollar computers, there were software hobbyists who found ways to get into college computer labs, often by making themselves useful there. Today, there are much more powerful computers that are cheap enough that hobbyists are willing to take them apart. But there are a few computer architectures specifically intended for use by hobbyists.

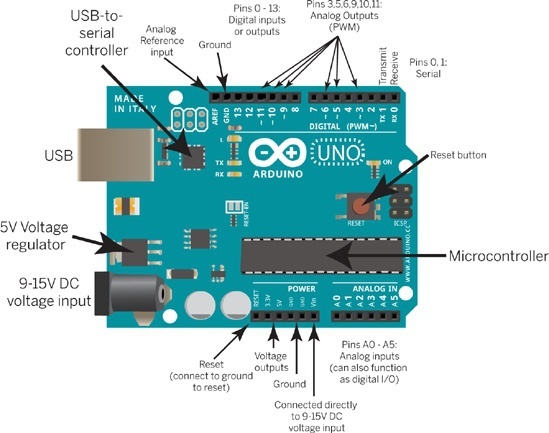

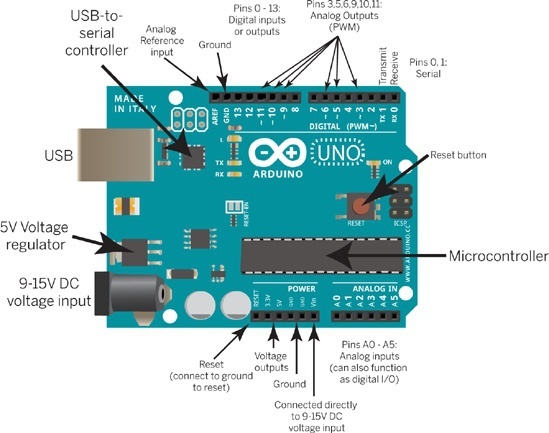

By far the most popular computer specifically for hobbyists is the Arduino. It's a circuit board, not just a processor. Around the edges of the board are connectors. On the short edge on the left in the picture are the power input, which can connect to a power supply plugged into the wall or to a battery pack for a mobile device such as a robot, and a USB connector used mainly to download programs from a desktop or laptop computer. On the long edges are connectors for single wires connected to remote sensors (for light, heat, being near a wall, touching another object, etc.) or actuators (stepping motors, lights, buzzers, etc.).

One important aspect of the Arduino design is that it's free ("free as in freedom"). Anyone can make and even sell copies of the Arduino. This is good because it keeps the price down (the basic Arduino Uno board costs $22) and encourages innovation, but it also means that there can be incompatible Arduino-like boards. (The name "Arduino" is a trademark that can be used only by license from Arduino AG.)

The processor in most Arduino models is an eight-bit RISC system with memory included in the chip, called the AVR, from a company called Atmel. It was designed by two (then) students in Norway, named Alf-Egil Bogen and Vegard Wollan. Although officially "AVR" doesn't stand for anything, it is widely believed to come from "Alf and Vegard's RISC." There are various versions of the AVR processor, with different speeds, memory capacities, and of course prices; there are various Arduino models using the different processors.

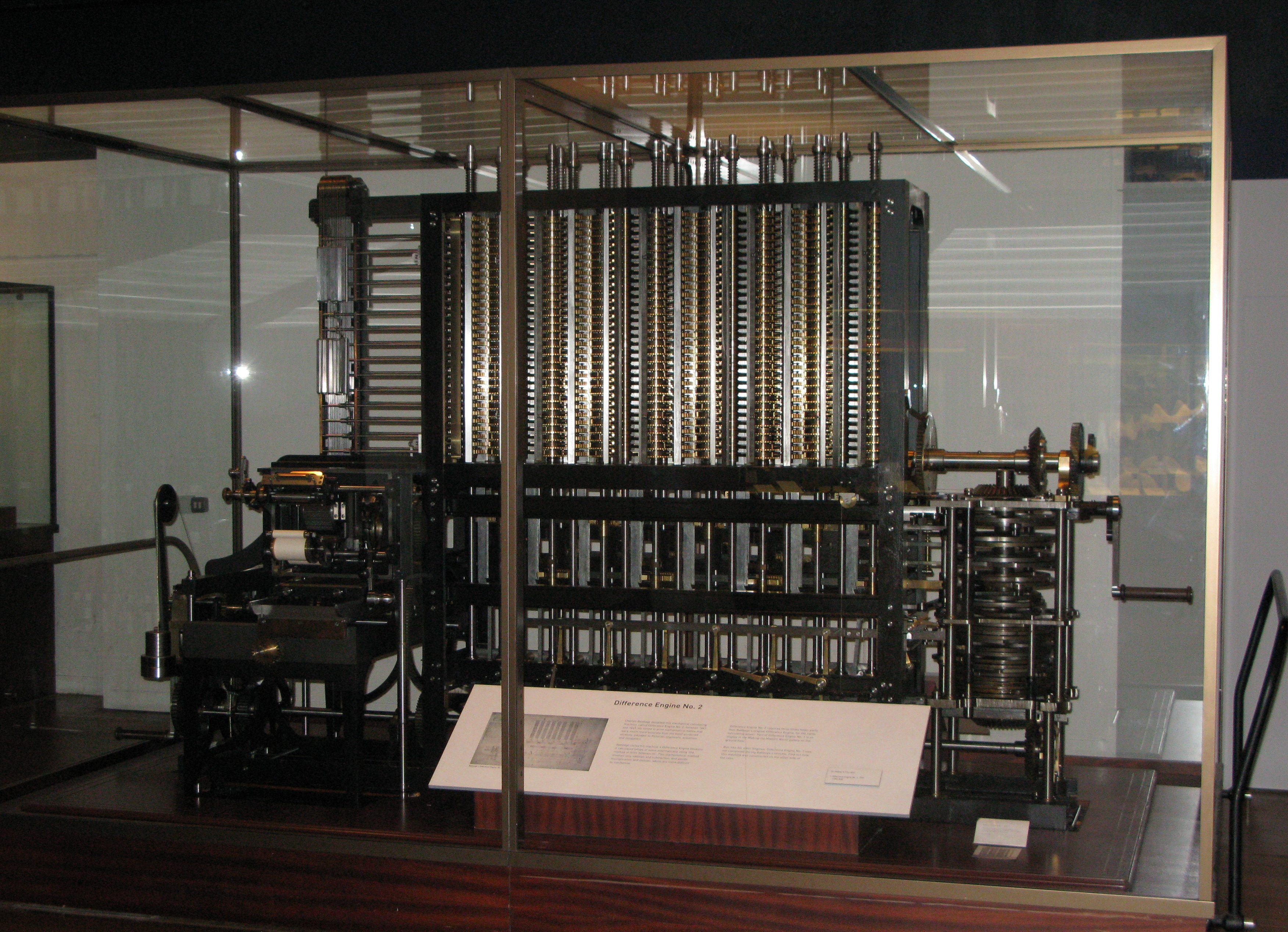

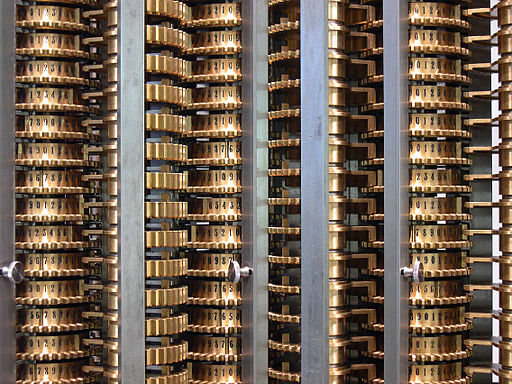

Unlike most ("von Neumann architecture") computers, the AVR ("Harvard architecture") separates program memory from data memory. (It actually has three kinds of memory, one for the running program, one for short-term data, and one for long-term data.) Babbage's Analytical Engine was also designed with a program memory separate from its data memory.

Why would you want more than one kind of memory?

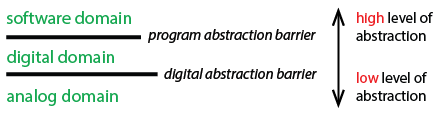

There are actually two different design issues at work in this architecture. One is all the way down in the analog domain, having to do with the kind of physical circuitry used. There are many memory technologies, varying in cost, speed, and volatility: volatile memory loses the information stored in it when the device is powered off, while non-volatile memory retains the information. Here's how memory is used in the AVR chips:

- EEPROM (512 bytes–4 kB) is non-volatile, and is used for very long-term data, like a file on a computer's disk, except that there is only a tiny amount available. Programs on the Arduino have to ask explicitly to use this memory, with an EEPROM library.

  - The name stands for Electrically Erasable Programmable Read-Only Memory, which sounds like a contradiction in terms. In the early days of transistor-based computers, there were two kinds of memory, volatile (Random Access Memory, or RAM) and non-volatile (Read-Only Memory, or ROM). The values stored in an early ROM had to be built in by the manufacturer of the memory chip, so it was expensive to have a new one made. Then came Programmable Read-Only Memory (PROM), which was read-only once installed in a computer, but could be programmed, once only, using a machine that was only somewhat expensive. Then came EPROM, Erasable PROM, which could be erased in its entirety by shining a bright ultraviolet light on it, and then reprogrammed like a PROM. Finally there was Electrically Erasable PROM, which could be erased while installed in a computer. That makes it essentially equivalent to RAM, except that erasing is much slower than rewriting a word of RAM, so you use it only for values that aren't going to change often.

- SRAM (1–4 kB): This memory loses its contents when the machine is turned off; in other words, it's volatile. It is used for temporary data, like the script variables in a Snap! script.

  - The name stands for Static Random Access Memory. The "Random Access" part differentiates it from the magnetic tape storage used on very old computers, in which it took a long time to get from one end of the tape to the other, so it was only practical to write or read data in sequence. Today all computer memory is random access, and the name "RAM" really means "writable," as opposed to read-only. The "Static" part of the name means that, even though the memory requires power to retain its value, it doesn't require periodic refreshing as regular ("Dynamic") computer main memory does. ("Refreshing" means that every so often, the computer has to read the value of each word of memory and rewrite the same value, or else it fades away. This is a good example of computer circuitry whose job is to maintain the digital abstraction, in which a value is zero or one, and there's no such thing as "fading" or "in-between values.") Static RAM is faster but more expensive than dynamic RAM; that's why DRAM is used for the very large (several gigabytes) memories of desktop or laptop computers.

- Flash memory (16–256 kB): This is the main memory used for programs and data. Flash memory is probably familiar to you because it's used for the USB sticks that function as portable external file storage. It's technically a kind of EEPROM, but with a different physical implementation that makes it much cheaper (so there can be more of it in the Arduino), but more complicated to use, requiring special control circuitry to maintain the digital abstraction.

  - "More complicated" means, for example, that changing a bit value from 1 to 0 is easy, but changing it from 0 to 1 is a much slower process that involves erasing a large block of memory to all 1 bits and then rewriting the values of the bits you didn't want to change.
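The flash behavior described in the last item can be sketched as a toy model in Python. This is only an illustration, not how any real flash controller is implemented: the class name `FlashBlock`, its method names, and the tiny default block size are all assumptions made up for this example.

```python
ERASED = 0xFF  # an erased flash byte has all of its bits set to 1


class FlashBlock:
    """Toy model of one erase block of flash memory (hypothetical, for illustration)."""

    def __init__(self, size=8):
        self.cells = [ERASED] * size
        self.erase_count = 0  # how many slow block erases have happened

    def program(self, index, value):
        # Programming can only change bits from 1 to 0, so the stored
        # result is the bitwise AND of the old and new values.
        self.cells[index] &= value

    def erase(self):
        # Erasing is all-or-nothing: every byte in the block goes back to all 1s.
        self.cells = [ERASED] * len(self.cells)
        self.erase_count += 1

    def write(self, index, value):
        current = self.cells[index]
        if current & value == value:
            # Every 1 bit we want is already 1, so only 1 -> 0 changes are needed.
            self.program(index, value)
            return
        # Some bit must go from 0 to 1: save the block, erase it, rewrite everything.
        saved = list(self.cells)
        saved[index] = value
        self.erase()
        for i, byte in enumerate(saved):
            self.program(i, byte)
```

In this model, writing `0xF0` into a fresh block and then `0x30` into the same byte needs no erase, because both writes only turn 1 bits into 0 bits. Writing `0x31` afterward forces an erase of the whole block, and the neighboring bytes have to be rewritten to keep their values.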

So, that's why there are physically different kinds of memory in the AVR chips, but none of that completely explains the Harvard architecture, in which memory is divided into program and data, regardless of how long the data must survive. The main reason to have two different memory interface circuits is that it allows the processor to read a program instruction and a data value at the same time. This can in principle make the processor twice as fast, although that much speed gain isn't found in practice.
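A rough way to see where the "twice as fast" figure comes from is to count memory cycles in a toy model. The sketch below assumes that every instruction needs one memory cycle to be fetched, and that some instructions need one more cycle to read or write a data value. The function name `memory_cycles` and its parameters are invented for this example, and real processors are far more complicated.

```python
def memory_cycles(needs_data, separate_memories):
    """Count memory cycles for a program in a toy model.

    needs_data: list of booleans, one per instruction, saying whether
                that instruction also reads or writes a data value.
    separate_memories: True for a Harvard-style design in which the data
                access overlaps with an instruction fetch; False for a
                single shared memory interface.
    """
    cycles = 0
    for uses_data in needs_data:
        if separate_memories:
            cycles += 1  # the fetch and the data access happen at the same time
        else:
            cycles += 2 if uses_data else 1  # one interface: one access at a time
    return cycles
```

For a four-instruction program in which three instructions touch data, `memory_cycles([True, True, False, True], False)` gives 7 cycles with a shared memory and `memory_cycles([True, True, False, True], True)` gives 4 with separate memories. In this model the ratio reaches exactly 2 only when every instruction uses data.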

To understand the benefit of simultaneous instruction and data reading, you have to understand that processors are often designed using an idea called pipelining. The standard metaphor is about doing your laundry, when you have more than one load. You wash the first load, while your dryer does nothing; then you wash the second load while drying the first load, and so on until the last load. Similarly, the processor in a computer includes circuitry to decode an instruction, and circuitry to do arithmetic. If the processor does one thing at a time, then at any moment either the instruction decoding circuitry or the arithmetic circuitry is doing nothing. But if you can read the next instruction at the same time as carrying out the previous one, all of the processor is kept busy.
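The laundry metaphor can be turned into a small timing calculation. The sketch below is an illustration only: the function names and the default of one time unit per wash and per dry are assumptions chosen for this example.

```python
def sequential_time(loads, wash=1, dry=1):
    """Total time if each load is washed and dried before the next one starts."""
    return loads * (wash + dry)


def pipelined_time(loads, wash=1, dry=1):
    """Total time if the washer starts the next load while the dryer is running."""
    washer_free = 0  # time at which the washer finishes its current load
    dryer_free = 0   # time at which the dryer finishes its current load
    for _ in range(loads):
        washed_at = washer_free + wash
        washer_free = washed_at
        # A load can start drying only once it is washed AND the dryer is empty.
        start_dry = max(washed_at, dryer_free)
        dryer_free = start_dry + dry
    return dryer_free
```

With four loads and equal wash and dry times, `sequential_time(4)` is 8 time units and `pipelined_time(4)` is 5. As the number of loads grows, the pipelined time approaches half of the sequential time, because after the first load both machines are busy all the time.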

This was a long explanation, but it's still vastly oversimplified. For one thing, it's possible to use pipelining in a von Neumann architecture also. And for another, a pure Harvard architecture wouldn't allow a computer to load programs for itself to execute. So various compromises are used in practice.

Atmel has since introduced a line of ARM-compatible 32-bit processors, and Arduino has boards that use those processors while keeping the same layout of connectors along the edges.

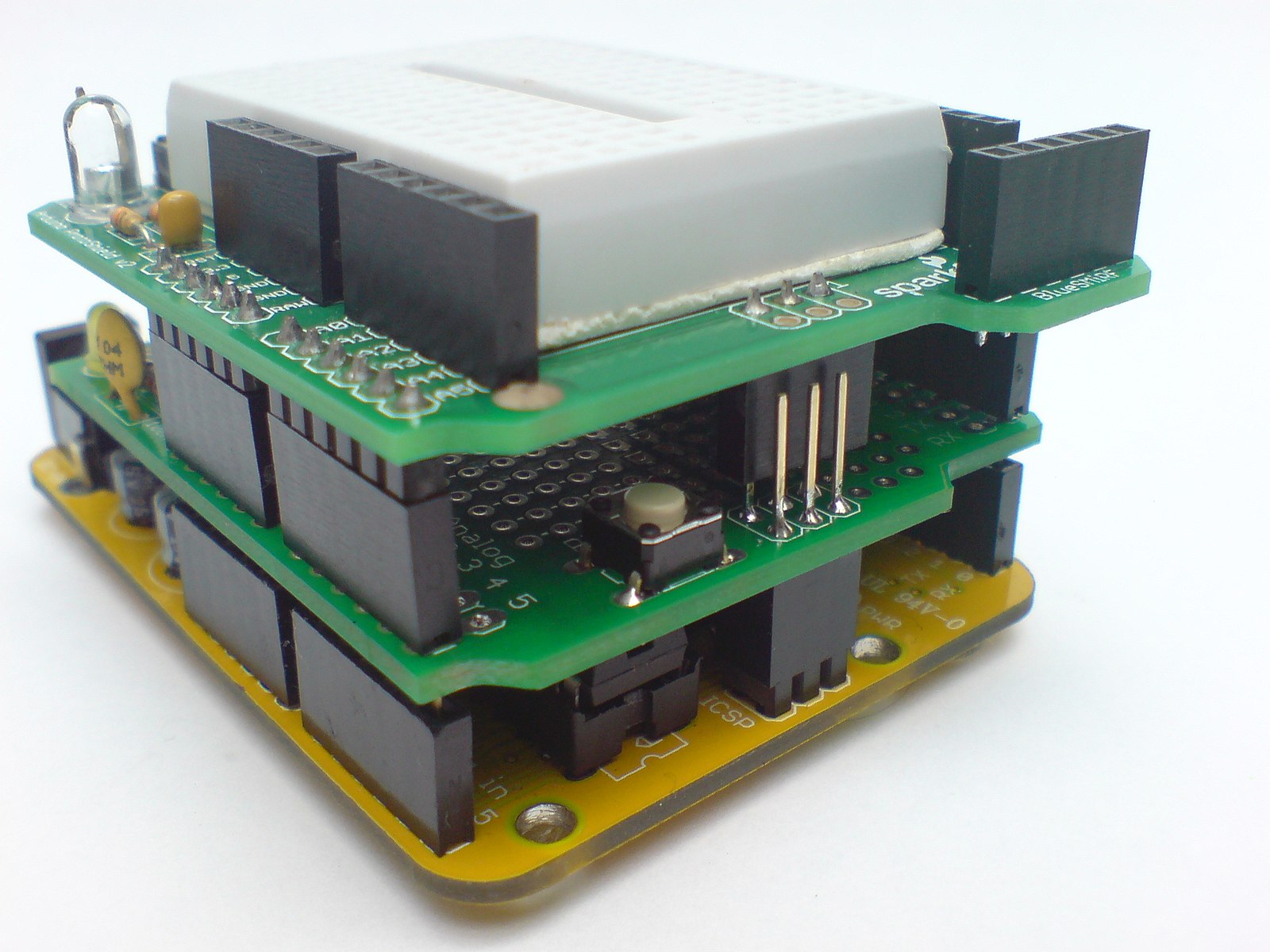

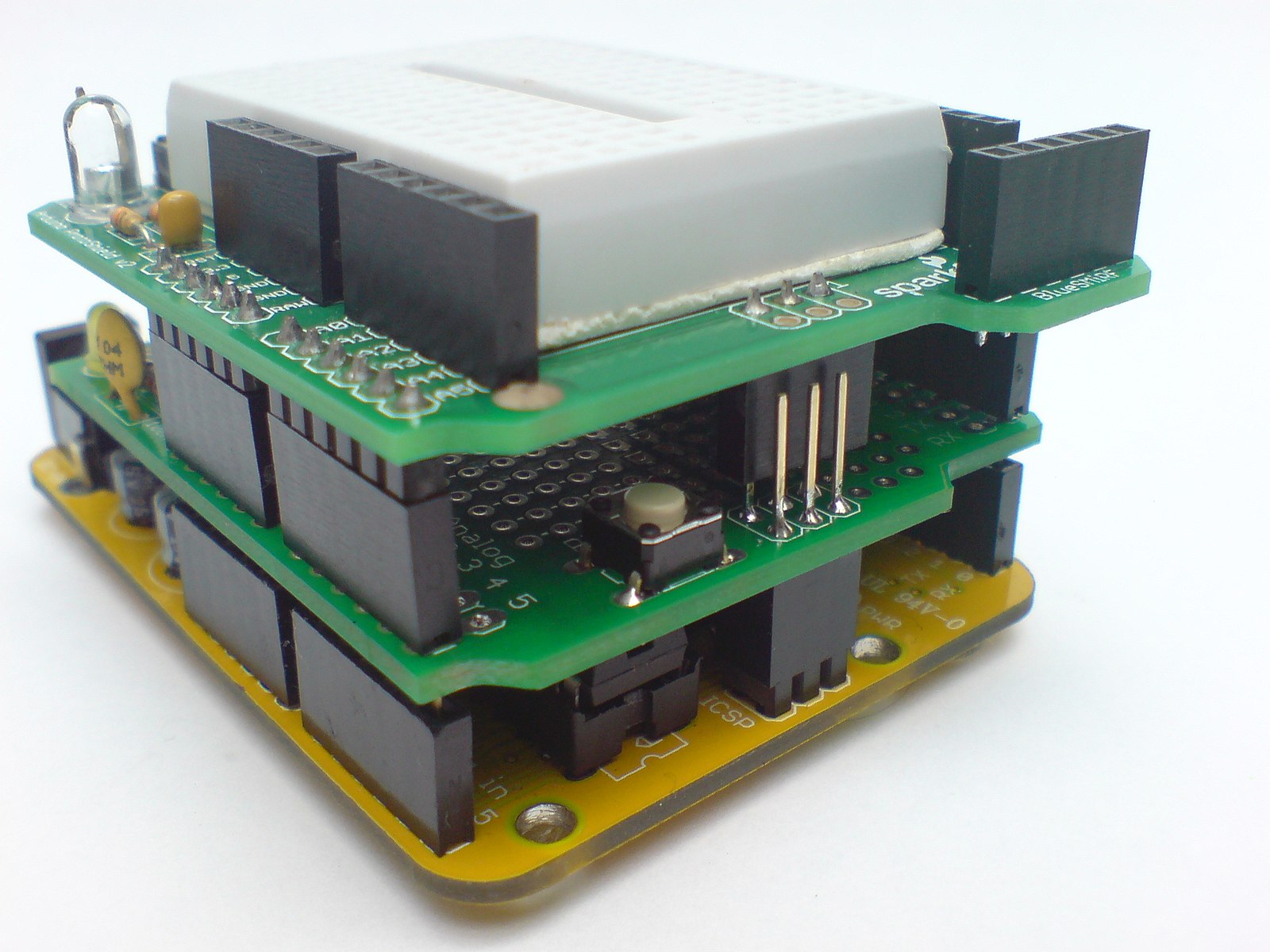

One thing that has contributed to the popularity of the Arduino with hobbyists is the availability of shields, which are auxiliary circuit boards that plug into the side edge connectors and have the same connectors on their top side. Shields add features to the system. Examples are motor control shields, Bluetooth shields for communicating with cell phones, RFID shields to read those product tags you find inside the packaging of many products, and so on. Both the Arduino company and others sell shields.

Stack of Arduino shields

Image by Wikimedia user Marlon J. Manrique, CC-BY-SA 2.0.

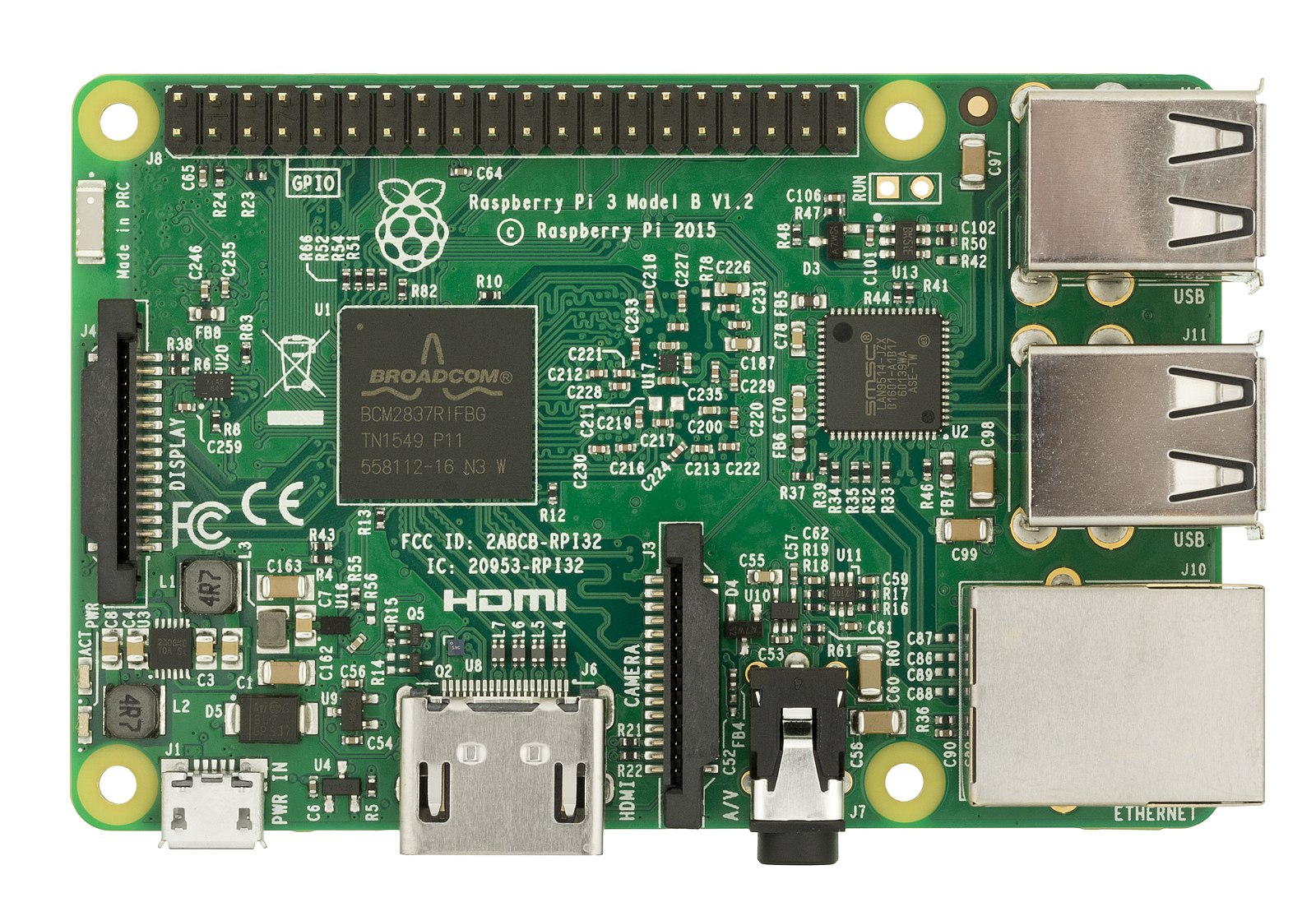

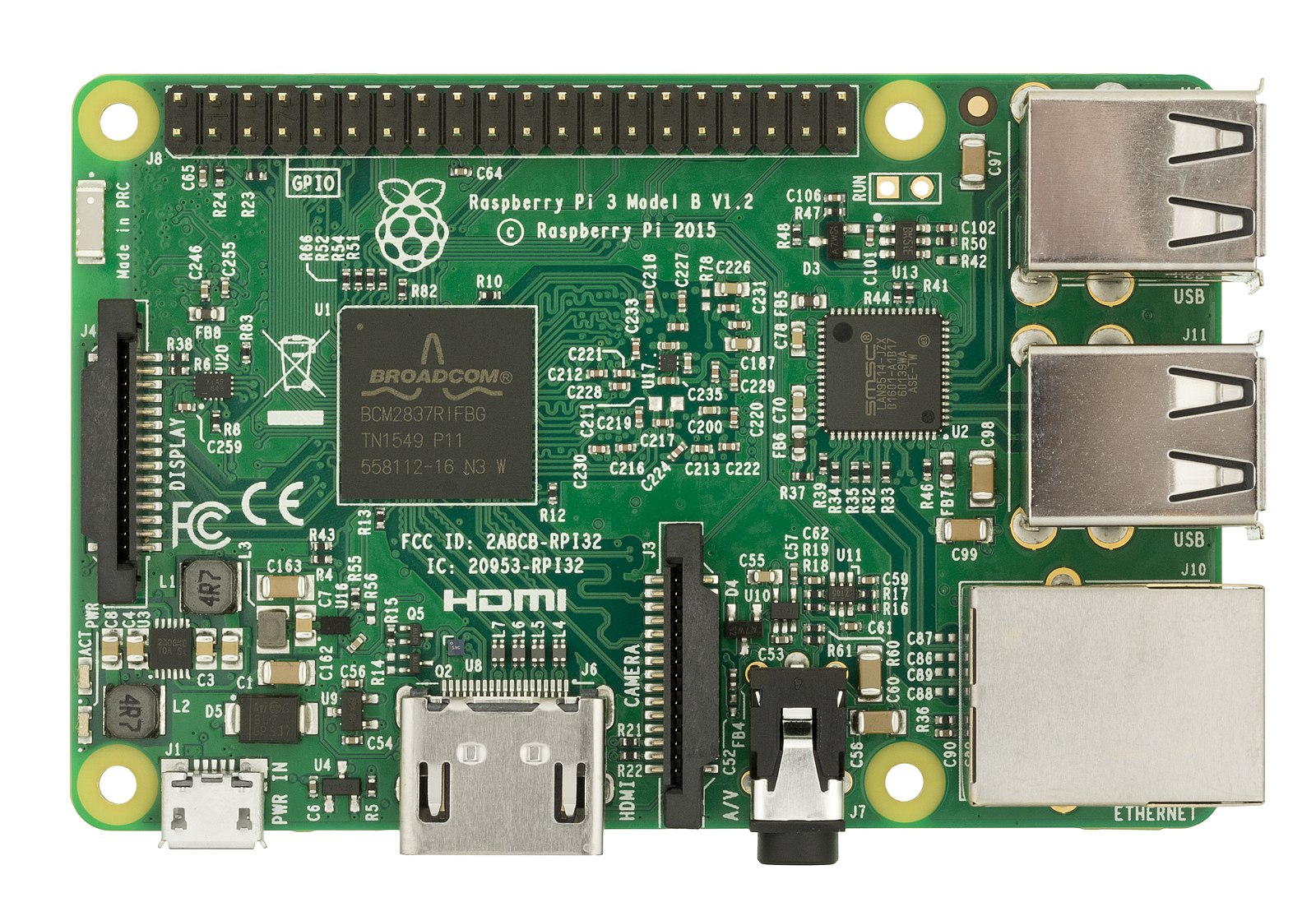

A completely different hobbyist architecture is the Raspberry Pi. It was designed to be used like a desktop or laptop computer, but with more access to its electronics. It uses an ARM-compatible processor, like most cell phones, but instead of running phone operating system software such as Android, it runs "real" computer operating systems. It ships with Linux, but people have run Windows on it.

The main thing that makes it exciting is that it's inexpensive: different models range in price from $5 to $35. That price includes just the circuit board, as in the picture, without a keyboard, display, mouse, power adapter, or a case. The main expense in kit computers is the display, so the Pi is designed to plug into your TV. You can buy kits that include a minimal case, a keyboard, and other important add-ons for around $20. You can also buy fancy cases to make it look like any other computer, with a display, for hundreds of dollars.

Because the Pi is intended for educational use, it comes with software, some of which is free for anyone, but some of which generally costs money for non-Pi computers. One important example is Mathematica, which costs over $200 for students (their cheapest price), but is included free on the Pi.

Like the Arduino, the Pi supports add-on circuit boards with things like sensors and wireless communication modules.

Raspberry Pi board

Image by Evan Amos, via Wikimedia, public domain
