
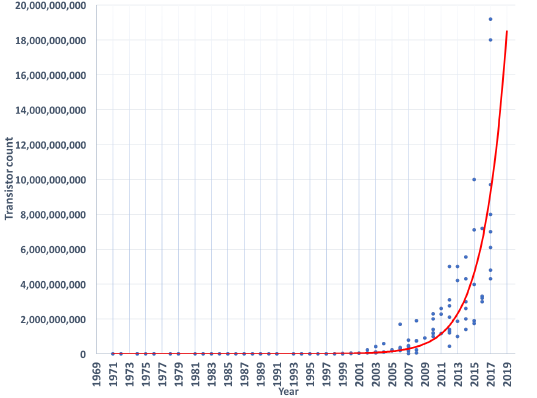

In 1965, Gordon Moore, one of the pioneers of integrated circuits, predicted that the number of transistors that could be fit on one chip would double every year. In 1975, he revised his estimate to doubling every two years. This prediction is known as Moore's Law.

Moore's Law is the prediction that the number of transistors that fit on one chip doubles every two years.
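Written as a formula, the two-year version of the prediction says that a chip holding $N_0$ transistors today should hold about $N(t)$ transistors after $t$ years. The symbols $N_0$, $N(t)$, and $t$ are introduced here only for illustration, and the formula assumes a perfectly steady doubling rate, which real chips follow only roughly:

$$
N(t) = N_0 \cdot 2^{t/2}
$$

For example, over 20 years the growth factor is $2^{20/2} = 2^{10} = 1024$, roughly a thousandfold increase.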

Other important measures, such as processor speed and the amount of memory in a computer, have turned out to follow roughly the same doubling pattern. Faster hardware increases the size of the problems you can solve in a reasonable amount of time.
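How much bigger a problem you can handle depends on how the algorithm's running time grows with the input size. The Python sketch below assumes a hypothetical budget of 10,000 basic steps and three made-up cost functions (linear, quadratic, and exponential); a machine that is twice as fast is modeled as one that can do twice as many steps in the same time. The helper `largest_problem` is a name invented for this example.

```python
def largest_problem(cost, budget):
    """Largest n with cost(n) <= budget, assuming cost grows as n grows."""
    n = 0
    while cost(n + 1) <= budget:
        n += 1
    return n


# Hypothetical cost functions: number of steps needed for an input of size n.
costs = {
    "linear (n)": lambda n: n,
    "quadratic (n^2)": lambda n: n * n,
    "exponential (2^n)": lambda n: 2 ** n,
}

budget = 10_000  # steps the old machine can do in the time we're willing to wait
for name, cost in costs.items():
    before = largest_problem(cost, budget)
    after = largest_problem(cost, 2 * budget)  # twice as fast: twice the steps
    print(f"{name}: {before} -> {after}")
```

With these numbers, the linear algorithm's problem size doubles (10000 to 20000), the quadratic one grows only from 100 to 141, and the exponential one from 13 to 14. Doubling speed always helps, but how much depends on the algorithm.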

The importance of Moore's Law isn't just that computers get bigger and faster over time; it's that engineers can predict how much bigger and faster. That helps them plan hardware and software projects that start today but will be used five years from now.
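As a rough planning aid, the prediction reduces to one line of arithmetic. This sketch assumes a steady doubling period (two years by default, per the revised estimate); `growth_factor` is a hypothetical helper name, and real hardware only approximately follows such a curve.

```python
def growth_factor(years, doubling_period=2.0):
    """How many times bigger something gets if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# Planning a project that will ship five years from now:
print(round(growth_factor(5), 2))           # two-year doubling: prints 5.66
print(growth_factor(5, doubling_period=1))  # original one-year estimate: prints 32.0
```

The gap between the two results shows why the choice of doubling period matters so much for long-range planning.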

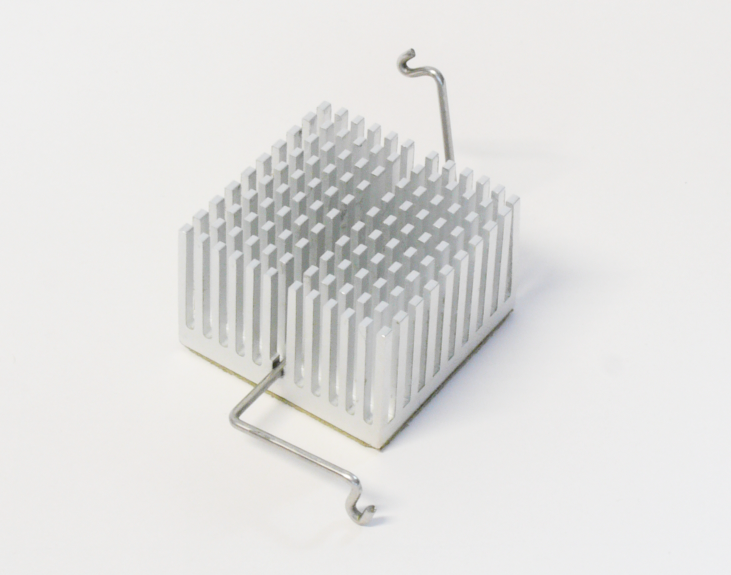

For transistor counts to keep growing, transistors must keep getting smaller. But chip density and processor speed have run into an important limit: denser chips and faster signal processing both generate more heat. Current technology is close to generating enough heat to melt the chips and destroy the computer. This is why processor chips are surrounded by metal heat sinks (one shown right), which conduct heat away from the chip and into the air.

Because of the heat problem, chip manufacturers have, at least for now, given up on making individual processors faster. Instead, they put more than one processor on a chip. If a computation can carry out the same algorithm on different parts of the data at the same time (in parallel, much like sprite clones all running the same script at once), then these multicore chips can achieve an effective speed much greater than that of a single processor. A computer you buy today is likely to have two, four, or more processors on one chip. But using multicore chips efficiently requires software written with multicore in mind.
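Here is a minimal Python sketch of that idea, assuming a made-up task (counting primes) that splits cleanly into independent chunks. Every worker process runs the same function, `count_primes`, on its own chunk, like clones running the same script, and the partial counts are added at the end. The function names and the chunking scheme are illustrative choices; the standard library's `concurrent.futures.ProcessPoolExecutor` handles spreading the chunks across cores.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def is_prime(n):
    """Trial division: deliberately slow, so there is real work to spread out."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def count_primes(chunk):
    """The same 'script' every worker runs, each on its own part of the data."""
    return sum(1 for n in chunk if is_prime(n))


def split(data, parts):
    """Cut data into at most `parts` nearly equal chunks."""
    size = max(1, -(-len(data) // parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_count_primes(data, workers=None):
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # pool.map hands the chunks out to worker processes and returns results in order.
        return sum(pool.map(count_primes, split(data, workers)))


if __name__ == "__main__":
    print(parallel_count_primes(list(range(100_000))))  # prints 9592
```

The `if __name__ == "__main__":` guard is needed on platforms where Python starts worker processes by re-importing the script. Whether this runs faster than a plain single-process loop depends on how many cores the machine has and on the overhead of sending data to the workers.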

Sometime in the next decade, transistors will approach the size of an atom, and at the atomic scale transistors won't work, for various reasons. So there is a more fundamental limit on the density of transistors on a chip than heat alone. Similarly, there are fundamental limits on the speed of a processor, such as how fast electrical signals can travel through a wire.

Moore's Law optimists argue that technologies other than transistors will become practical before manufacturers hit a fundamental limit. One such approach would use a single electron to represent one bit. Electrons are much smaller than atoms, so this technology could allow further dramatic increases in density. Another approach would use light beams rather than electric current to represent bits. But these developments are still far away.